Restoring touch for amputees using a touch-sensitive prosthetic hand

October 15, 2013

New research at the University of Chicago is laying the groundwork for touch-sensitive prosthetic limbs that one day could convey real-time sensory information to amputees via a direct interface with the brain (credit: PNAS)

The research, published in the Proceedings of the National Academy of Sciences (open access), marks an important step toward new technology that, if implemented successfully, would increase the dexterity and clinical viability of robotic prosthetic limbs.

“To restore sensory motor function of an arm, you not only have to replace the motor signals that the brain sends to the arm to move it around, but you also have to replace the sensory signals that the arm sends back to the brain,” said the study’s senior author, Sliman Bensmaia, PhD, assistant professor in the Department of Organismal Biology and Anatomy at the University of Chicago.

“We think the key is to invoke what we know about how the brain of the intact organism processes sensory information, and then try to reproduce these patterns of neural activity through stimulation of the brain.”

Revolutionizing prosthetics

Bensmaia’s research is part of Revolutionizing Prosthetics, a multi-year Defense Advanced Research Projects Agency (DARPA) project that seeks to create a modular, artificial upper limb that will restore natural motor control and sensation in amputees. Managed by the Johns Hopkins University Applied Physics Laboratory, the project has brought together an interdisciplinary team of experts from academic institutions, government agencies and private companies.

Bensmaia and his colleagues at the University of Chicago are working specifically on the sensory aspects of these limbs. In a series of experiments with monkeys, whose sensory systems closely resemble those of humans, they identified patterns of neural activity that occur during natural object manipulation and then successfully induced these patterns through artificial means.

- The first set of experiments focused on contact location, or sensing where the skin has been touched. The animals were trained to identify several patterns of physical contact with their fingers. Researchers then connected electrodes to areas of the brain corresponding to each finger and replaced physical touches with electrical stimuli delivered to the appropriate areas of the brain. The result: The animals responded the same way to artificial stimulation as they did to physical contact.

- Next, the researchers focused on the sensation of pressure. In this case, they developed an algorithm to generate the appropriate amount of electrical current to elicit a sensation of pressure. Again, the animals’ response was the same whether the stimuli were felt through their fingers or through artificial means.

- Finally, Bensmaia and his colleagues studied the sensation of contact events. When the hand first touches or releases an object, it produces a burst of activity in the brain. Again, the researchers established that these bursts of brain activity can be mimicked through electrical stimulation.
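The three kinds of feedback described above (contact location, pressure, and contact events) can be pictured as a small encoding routine. The following is only an illustrative sketch, not the researchers' algorithm: the finger-to-electrode map, the current values, the contact threshold, and the linear pressure-to-current mapping are all hypothetical placeholders, since the article does not give the actual parameters or functions used in the study.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical map from finger to electrode index. In practice the mapping
# would come from each subject's implant; these values are made up.
FINGER_TO_ELECTRODE: Dict[str, int] = {"index": 0, "middle": 1, "little": 2}

# Illustrative constants only; none of these numbers come from the study.
MAX_CURRENT_UA = 100.0     # ceiling for the pressure-related current
BURST_CURRENT_UA = 80.0    # current for a brief onset/offset burst
CONTACT_THRESHOLD = 0.05   # normalized pressure that counts as "touching"


@dataclass
class StimCommand:
    electrode: int     # which electrode to stimulate (contact location)
    current_ua: float  # stimulation current in microamps (pressure)
    phasic: bool       # True for a brief burst marking a contact event


def pressure_to_current(pressure: float) -> float:
    """Map normalized pressure (0..1) to a current.

    A linear mapping is assumed purely as a placeholder.
    """
    clamped = min(max(pressure, 0.0), 1.0)
    return clamped * MAX_CURRENT_UA


def encode_touch(finger: str, pressure: float, prev_pressure: float) -> List[StimCommand]:
    """Turn one sensor reading from a prosthetic finger into stimulation commands."""
    electrode = FINGER_TO_ELECTRODE[finger]
    commands: List[StimCommand] = []

    was_touching = prev_pressure > CONTACT_THRESHOLD
    is_touching = pressure > CONTACT_THRESHOLD

    # Contact event: a burst when the finger first touches or releases an object.
    if was_touching != is_touching:
        commands.append(StimCommand(electrode, BURST_CURRENT_UA, phasic=True))

    # Sustained contact: current that tracks the pressure on the skin.
    if is_touching:
        commands.append(StimCommand(electrode, pressure_to_current(pressure), phasic=False))

    return commands
```

In this sketch, a call such as `encode_touch("index", 0.5, 0.0)` yields a burst command followed by a pressure-tracking command on the electrode assigned to the index finger, while a release yields only the burst.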


The result of these experiments is a set of instructions that can be incorporated into a robotic prosthetic arm to provide sensory feedback to the brain through a neural interface. Bensmaia believes such feedback will bring these devices closer to being tested in human clinical trials.

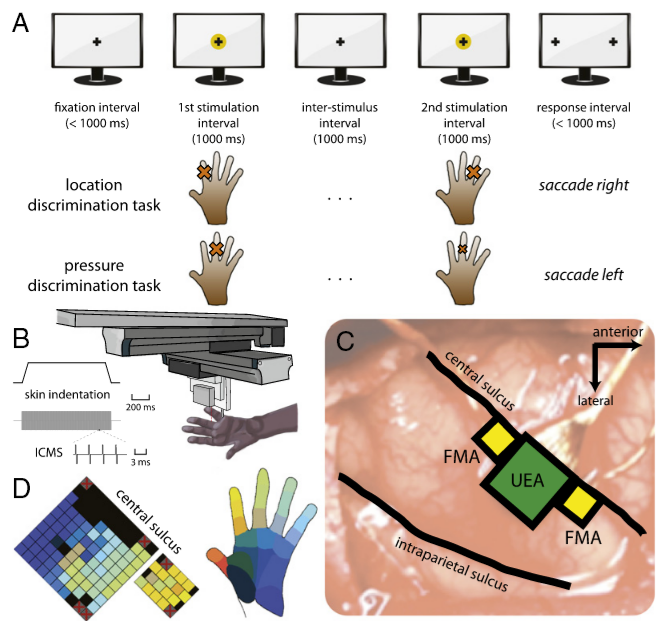

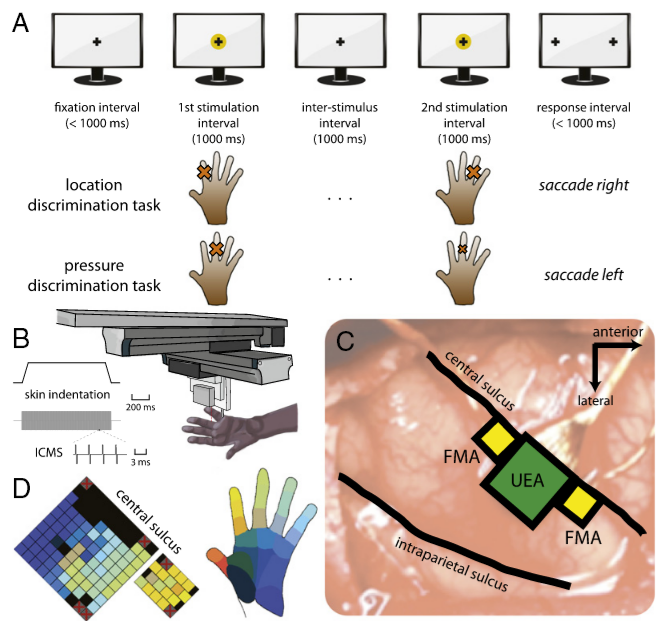

Experimental design. (A) Upper: Trial structure for all of the behavioral tasks: The cross is a fixation target or a response target, and the yellow circles indicate the two stimulus intervals. Lower: One example trial each for the location discrimination and the pressure discrimination task. The size of the cross is proportional to the depth of indentation. (B) Depiction of the triaxial indenting stimulator. (C) Electrode implants in brain areas of one of the three animals. (D) Map of the implant area and corresponding hand locations. (Credit: Gregg A. Tabot et al./PNAS)

“The algorithms to decipher motor signals have come quite a long way, where you can now control arms with seven degrees of freedom. It’s very sophisticated. But I think there’s a strong argument to be made that they will not be clinically viable until the sensory feedback is incorporated,” Bensmaia said. “When it is, the functionality of these limbs will increase substantially.”

The Defense Advanced Research Projects Agency, National Science Foundation and National Institutes of Health funded this study. Additional authors include Gregg Tabot, John Dammann, Joshua Berg and Jessica Boback from the University of Chicago; and Francesco Tenore and R. Jacob Vogelstein from the Johns Hopkins University Applied Physics Laboratory.

Abstract of Proceedings of the National Academy of Sciences paper

Our ability to manipulate objects dexterously relies fundamentally on sensory signals originating from the hand. To restore motor function with upper-limb neuroprostheses requires that somatosensory feedback be provided to the tetraplegic patient or amputee. Given the complexity of state-of-the-art prosthetic limbs and, thus, the huge state space they can traverse, it is desirable to minimize the need for the patient to learn associations between events impinging on the limb and arbitrary sensations. Accordingly, we have developed approaches to intuitively convey sensory information that is critical for object manipulation — information about contact location, pressure, and timing — through intracortical microstimulation of primary somatosensory cortex. In experiments with nonhuman primates, we show that we can elicit percepts that are projected to a localized patch of skin and that track the pressure exerted on the skin. In a real-time application, we demonstrate that animals can perform a tactile discrimination task equally well whether mechanical stimuli are delivered to their native fingers or to a prosthetic one. Finally, we propose that the timing of contact events can be signaled through phasic intracortical microstimulation at the onset and offset of object contact that mimics the ubiquitous on and off responses observed in primary somatosensory cortex to complement slowly varying pressure-related feedback. We anticipate that the proposed biomimetic feedback will considerably increase the dexterity and embodiment of upper-limb neuroprostheses and will constitute an important step in restoring touch to individuals who have lost it.

